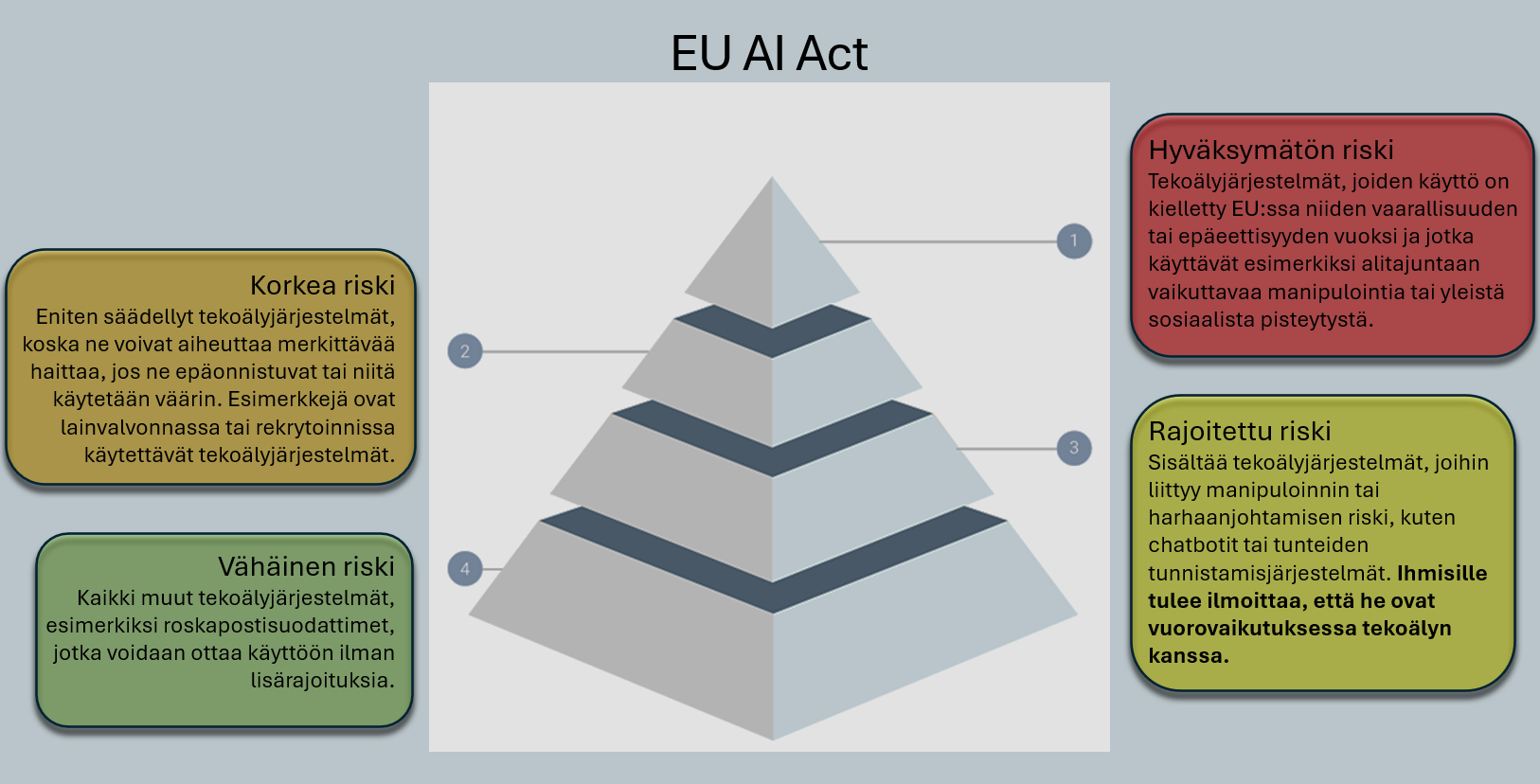

Risk levels illustrate the different regulatory categories of the AI Act.

AI Legislation in 2025 – EU and Beyond

Artificial Intelligence (AI) has made tremendous strides in recent years, impacting every aspect of society: healthcare, finance, transportation, and daily life. This rapid development has brought new risks that can, at worst, threaten both individual rights and societal stability. This is why legislation has become central: how do we ensure that AI is developed and used safely, ethically, and with respect for human rights?

On August 1, 2024, the European Union's long-awaited AI Regulation (AI Act) entered into force, a few weeks after its publication in the Official Journal. A transition period then began, giving companies and other stakeholders time to adapt to the new rules. When the first provisions of the AI Act, including the bans on unacceptable-risk practices, start to apply on February 2, 2025, they will introduce the first binding rules for AI use. Why is this important?

Simply put: if a system is used in a way that creates an "unacceptable risk", for example by endangering safety or human rights, the consequences can be severe. In the worst cases, fines can reach €35 million or 7% of a company's global annual revenue, whichever is higher. It remains to be seen how the EU will enforce these rules in practice and how courts will apply them in different situations. The regulation clearly aims to protect citizens and society, but it also requires companies to practice more rigorous risk management and oversight.
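The fine ceiling for prohibited practices is the higher of the two amounts mentioned above. The following minimal Python sketch makes that comparison concrete. It assumes the input is the company's total worldwide annual turnover for the preceding financial year, in whole euros; the function name `max_fine_ceiling_eur` is hypothetical. For SMEs and start-ups, the Act instead caps the fine at whichever amount is lower, which the `is_sme` flag models. The result is only an upper limit: actual fines are set case by case.

```python
def max_fine_ceiling_eur(worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper limit of an AI Act fine for prohibited practices (illustrative sketch).

    worldwide_turnover_eur: total worldwide annual turnover for the
    preceding financial year, in whole euros (assumed input format).
    """
    fixed_cap = 35_000_000                            # fixed amount: EUR 35 million
    turnover_cap = worldwide_turnover_eur * 7 // 100  # 7% of turnover, integer math
    if is_sme:
        # SMEs and start-ups: the lower of the two amounts applies.
        return min(fixed_cap, turnover_cap)
    # General rule: the higher of the two amounts applies.
    return max(fixed_cap, turnover_cap)


print(max_fine_ceiling_eur(1_000_000_000))            # 70000000
print(max_fine_ceiling_eur(100_000_000))              # 35000000
print(max_fine_ceiling_eur(10_000_000, is_sme=True))  # 700000
```

For a company with €1 billion in turnover, the ceiling is €70 million, because 7% exceeds the fixed €35 million; at €100 million in turnover, the fixed amount is the higher one and applies. Integer arithmetic is used so that the percentage calculation involves no floating-point rounding.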

The EU as a Pioneer – Or Too Eager a Regulator?

The EU has already proven itself a pioneer in technology regulation: the GDPR (General Data Protection Regulation) created a solid foundation for privacy protection. When it took effect, however, the GDPR quickly attracted criticism: many small and medium-sized enterprises felt that its strict requirements hindered rapid innovation and created a heavy administrative burden. Civil society organizations, on the other hand, viewed the GDPR as an important step in protecting privacy.

Now, with the AI Act, the EU again aims to set an international standard for how advanced algorithms and learning systems should be designed, tested, and deployed. While the EU is praised for its proactive approach, critics fear that the regulation will slow Europe's growing AI ecosystem and weaken its competitiveness against regions like the United States and Asia.

EU legislation places special emphasis on how responsibility is divided between those who develop AI systems (called "providers" in the AI Act) and those who use them ("deployers"). In practice, providers must ensure a system's safety and transparency throughout its lifecycle, while deployers must follow the instructions for use and ensure that the system is not misused or used contrary to its intended purpose. Critics note, however, that distributed responsibility can lead to situations where no one bears full accountability.

Four Risk Levels

The new regulation classifies AI applications into four risk levels:

- Unacceptable Risks: These are AI applications that threaten safety, livelihoods, or human rights. Examples include social scoring systems, whether run by governments or private companies, and AI-powered toys that encourage dangerous behavior. Such applications are completely prohibited, and developing or using them can lead to the most severe sanctions.

- High-Risk Applications: This category includes systems used in areas such as healthcare, critical infrastructure, and employee management. They face strict requirements, such as conducting risk assessments, ensuring data quality, and continuous monitoring. Companies must also document how the systems operate and ensure they meet safety requirements.

- Limited Risk Applications: This group includes systems whose main risk lies in a lack of transparency, such as chatbots and other systems that interact directly with users. These systems face transparency requirements: users must know when they're interacting with AI.

- Minimal Risk Applications: Most AI systems fall into this category, which has no special regulatory requirements. Examples include spam filters and video games.
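The four levels form a simple lookup structure in which each level carries its own set of obligations. The following Python sketch encodes the levels as an enum and maps only the example systems named above to them. The names `RiskLevel`, `EXAMPLE_SYSTEMS`, and `obligations` are hypothetical, and the obligation summaries are condensed from this article rather than taken from the legal text. In reality, classifying a system requires a case-by-case assessment under the Act, so the function deliberately raises an error for anything outside its table instead of guessing.

```python
from enum import Enum


class RiskLevel(Enum):
    """The AI Act's four risk levels, each with a one-line summary of obligations."""
    UNACCEPTABLE = "prohibited"
    HIGH = "risk assessment, data quality, documentation, continuous monitoring"
    LIMITED = "transparency: users must know they are interacting with AI"
    MINIMAL = "no special requirements"


# Hypothetical lookup table built only from the examples in this article.
# Real classification requires a case-by-case legal assessment.
EXAMPLE_SYSTEMS = {
    "social scoring system": RiskLevel.UNACCEPTABLE,
    "toy that encourages dangerous behavior": RiskLevel.UNACCEPTABLE,
    "healthcare application": RiskLevel.HIGH,
    "critical infrastructure system": RiskLevel.HIGH,
    "employee management system": RiskLevel.HIGH,
    "chatbot": RiskLevel.LIMITED,
    "spam filter": RiskLevel.MINIMAL,
    "video game": RiskLevel.MINIMAL,
}


def obligations(system: str) -> str:
    """Return the risk level and summarized obligations for a known example."""
    level = EXAMPLE_SYSTEMS.get(system)
    if level is None:
        # Unknown systems are not guessed; they need a proper assessment.
        raise KeyError(f"{system!r} is not in the example table")
    return f"{level.name}: {level.value}"


for name in ("chatbot", "spam filter", "employee management system"):
    print(obligations(name))
```

A fixed table like this can only restate known examples; it cannot answer the harder question of where a new, borderline system belongs.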

Critics point out that defining risk levels can be complex and open to interpretation: what makes a system "high-risk," and who ultimately decides? There are also fears that SMEs in particular might shift investments away from Europe, creating a "regulatory escape" to other countries.

United States: Market-Driven Regulation

The United States approaches AI regulation quite differently. As of 2025, there is no comprehensive federal AI law comparable to the EU's; instead, sector-specific rules apply in areas such as healthcare and fintech. Markets remain central, and many companies, such as Microsoft and Google, have drafted their own internal ethical guidelines.

However, it's evident that the EU's AI Act is generating interest in the United States: observers are closely watching what impact stricter EU regulation will have on AI development and competition. Some analysts suggest that if the AI Act proves successful, the United States might learn from it – while others predict the opposite: lighter regulation to ensure competitiveness and investment-friendliness.

Asia: Different Strategies in China and Japan

In China, AI is guided by state leadership and strategic objectives such as economic growth and international competitiveness. By 2025, the country has published its own guidelines for AI developers, focusing on security and social stability. State oversight is quite comprehensive, and critical discussion of AI ethics is not as open as in Western countries.

In Japan, cooperation between businesses, government, and academia is close, but regulation is softer than in the EU. There, special attention is paid to applying AI to the needs of an aging population: examples include robotics and healthcare, areas where Japan is recognized as a world leader.

International Standards on the Horizon

AI development is inevitably global, which emphasizes the need for international cooperation. Under UN auspices, there have been discussions about global AI rules, but no binding agreement exists yet.

However, the EU's AI Act could prove to be a model similar to GDPR, as many countries are now considering how to balance innovation promotion and risk management. If the EU model proves successful, other states might follow suit – as happened with the data protection regulation. At the same time, it's important to remember that GDPR continues to face criticism in many circles for excessive administration, so global harmonization is not a given.

Questions About Responsibility Distribution

AI systems are rarely developed by a single entity. Often involved are subcontractors, cloud service providers, and data platforms, all of which play a role in the final product's safety and functionality. The AI Act requires companies to provide more detailed documentation and oversight so they can demonstrate compliance with legal requirements – and address potential problems in time.

- Contract Models and Role Clarity: Responsibilities must be clearly divided: who bears legal liability if a system malfunctions or causes harm?

- Transparency Requirements: All parties must be able to demonstrate how information is collected, used, and protected. Potential algorithmic biases and discriminatory effects must also be identified in advance.

What Does This Mean in Practice?

The purpose of the EU's AI Act is not to stifle innovation, but to ensure that AI is used responsibly and with respect for fundamental human rights. This means, for example, that companies must invest in:

- Risk Management and Assessment: Before deploying a system, companies must assess its potential risks, and after deployment, the system must be continuously monitored. A critical question is: who conducts the assessment, and by what criteria?

- Non-Discrimination: Algorithms must not place groups of people at a disadvantage based on, for example, gender, age, or ethnic background. However, many developers find ensuring non-discrimination challenging in practice, especially when the collected data contains biases.

- Transparency: Users have the right to know when they're interacting with AI, and how data is used in decision-making. For some actors, this may mean significant changes to current practices and communication methods.

- Fairness: The goal is for AI to benefit all of society, not just a small group of developers or large corporations. Critics note, however, that effective oversight is difficult to organize, and regulatory interpretation may vary by country.

Conclusion

2025 is a significant turning point for AI legislation. The EU's new regulation serves as a global pathfinder, and other economic regions are watching closely. International regulatory harmonization may take time, but AI's transformative impact is already visible across sectors.

At its best, AI can improve people's lives – for example, by speeding up medical diagnostics, streamlining everyday services, and creating entirely new jobs. At the same time, we must ensure that technology isn't misused or creates new, invisible risk structures in society. As with GDPR, the AI Act will certainly receive both praise and criticism, so it will be fascinating to see how regulation and innovation balance out in the coming years.

Keeping up with legislation is therefore in the interest of every organization and citizen. Knowledge and open discussion help ensure that AI's future is safe, fair, and accessible to all.

© 2025 AIvoille.com
